We often take for granted the idea that Kotlin Coroutines perform better than Threads. I decided to put this hypothesis to the test by implementing a real-world benchmark and looking at the actual metrics. In this post, I’ll summarize my findings, which might surprise some readers.

Coroutines vs Threads Benchmark

To compare the performance of Kotlin Coroutines and standard Threads I implemented a new benchmark in my open-sourced TechYourChance application.

The basic idea behind this benchmark is to launch many background tasks sequentially and, for each task, measure the time between a call that starts the task and the first instruction inside it. This duration, which I call “startup duration”, is basically the latency associated with transferring some work to the background.

The benchmark exercises three different background work mechanisms:

- A new bare Thread is created and started for each task.

- A new Coroutine is launched for each task.

- Tasks are submitted to a single thread pool for execution.
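As a rough illustration of the measurement described above, here is a minimal Java sketch that records the startup duration for bare threads and for a thread pool. It is not the code from the TechYourChance app: the class name, the method names, and the pool size of 4 are assumptions made for this example, and the coroutine case is left out because it requires the kotlinx.coroutines library.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.function.Consumer;

// Hypothetical sketch, not the benchmark from the TechYourChance app.
class StartupLatencySketch {

    // Launches `tasks` tasks one after another and returns, for each task,
    // the time in nanoseconds between the launch call and the first
    // instruction executed inside the task.
    static long[] measure(int tasks, Consumer<Runnable> launcher) {
        long[] durations = new long[tasks];
        for (int i = 0; i < tasks; i++) {
            final int index = i;
            CountDownLatch started = new CountDownLatch(1);
            long launchedAt = System.nanoTime();
            launcher.accept(() -> {
                // First instruction inside the task: take the timestamp.
                durations[index] = System.nanoTime() - launchedAt;
                started.countDown();
            });
            try {
                // Wait for the task, so that tasks are launched sequentially.
                started.await();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException(e);
            }
        }
        return durations;
    }

    // A new bare Thread is created and started for each task.
    static long[] measureBareThreads(int tasks) {
        return measure(tasks, task -> new Thread(task).start());
    }

    // Tasks are submitted to a single thread pool (size 4 is an assumption).
    static long[] measureThreadPool(int tasks) {
        ExecutorService pool = Executors.newFixedThreadPool(4);
        try {
            return measure(tasks, pool::execute);
        } finally {
            pool.shutdown();
        }
    }

    // Converts the samples to an average in microseconds.
    static double meanMicros(long[] nanos) {
        long sum = 0;
        for (long n : nanos) {
            sum += n;
        }
        return (double) sum / nanos.length / 1_000.0;
    }

    public static void main(String[] args) {
        int tasks = 1_000;
        System.out.printf("bare threads: %.1f us on average%n", meanMicros(measureBareThreads(tasks)));
        System.out.printf("thread pool:  %.1f us on average%n", meanMicros(measureThreadPool(tasks)));
    }
}
```

The sketch has no warm-up phase and runs on a desktop JVM rather than on Android, so its numbers are not comparable to the results reported below.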

Obviously, the results of this benchmark will vary between different devices. If you’d like to run the benchmark yourself, then just install the TechYourChance app on your own device (see the installation instructions on GitHub).

Coroutines vs Threads Startup Results

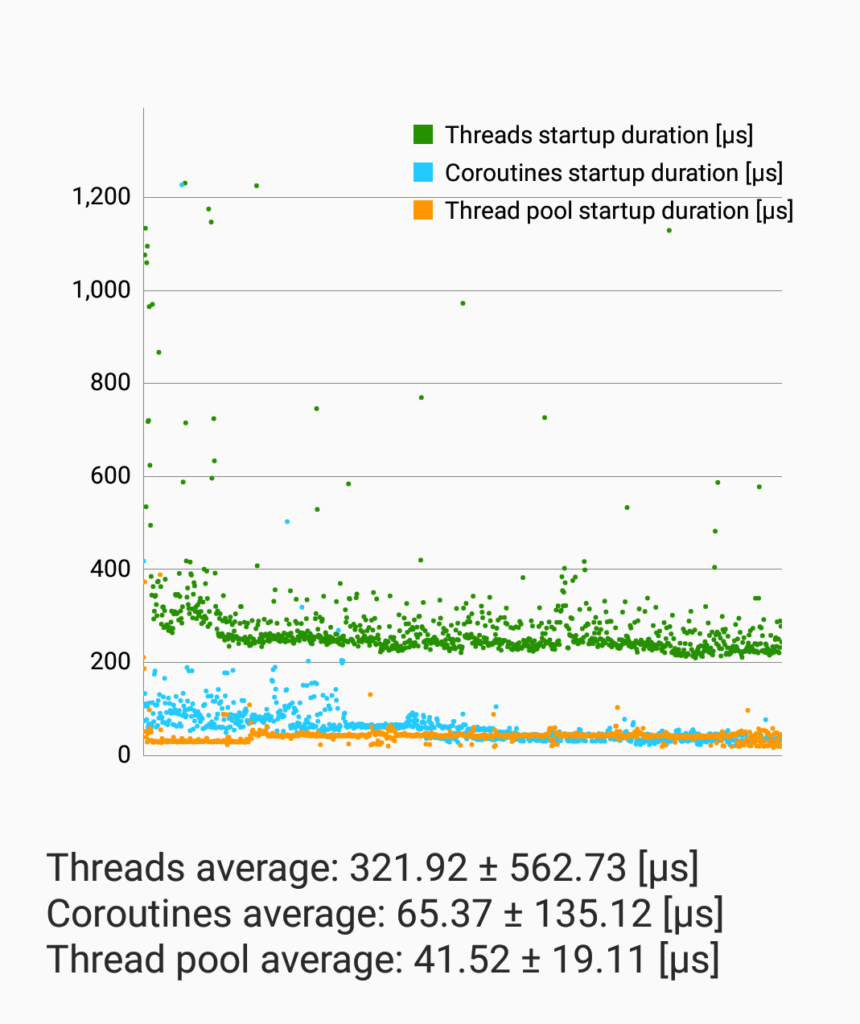

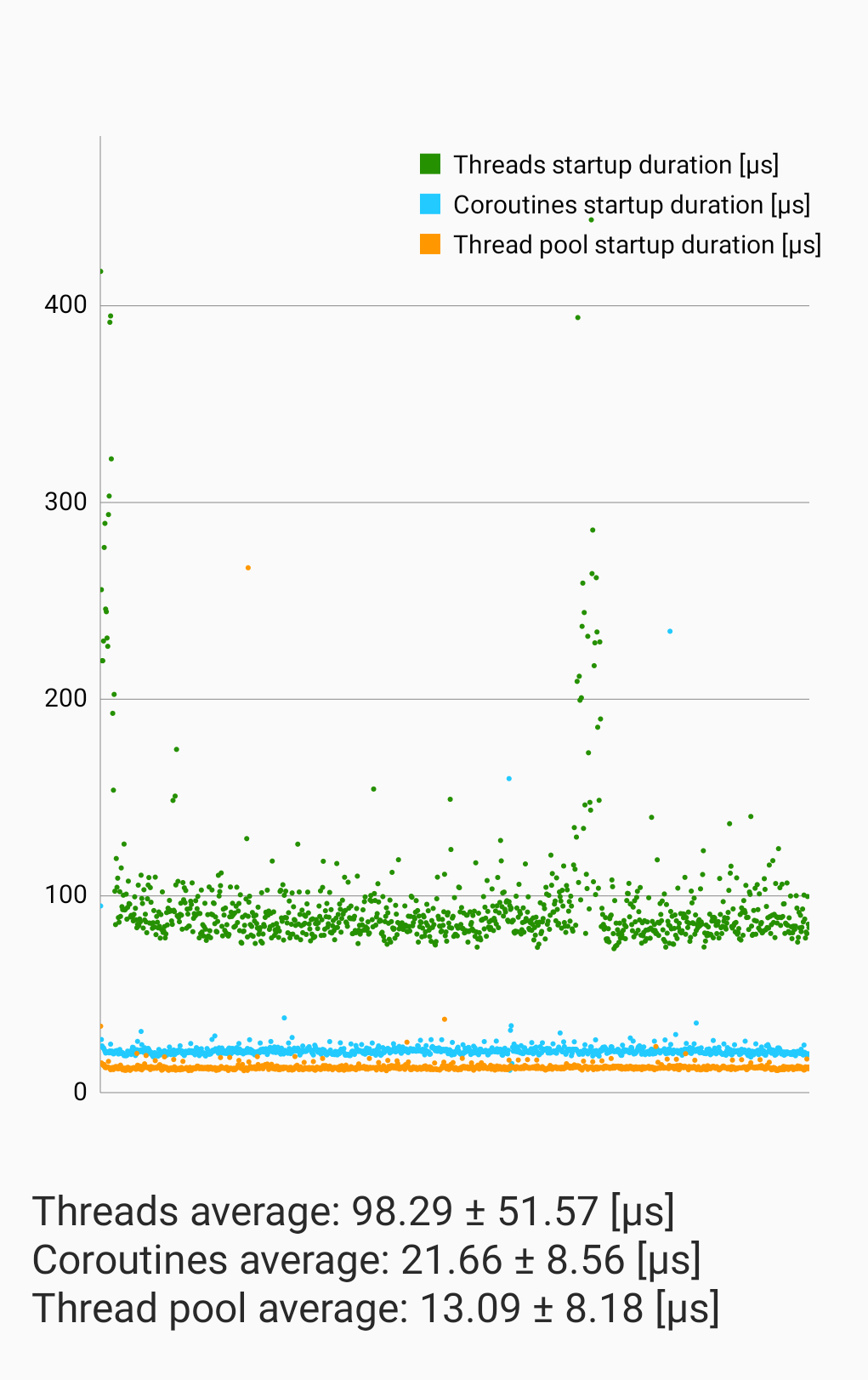

I ran the benchmark on two devices:

- Samsung Galaxy S7, which is the oldest device in my possession which is capable of running the TechYourChance app.

- Samsung Galaxy S20 FE, which is my current daily driver.

Galaxy S7 is ancient and weak device, so it should provide a good approximation of the worst case performance. Galaxy S20 FE is also relatively old and relatively weak device by today’s standard, so its performance will reflect the average experience of the bottom percentiles of your users.

These are the results (please note that the chart in TechYourChance app is zoom-able):

Results Discussion

It shouldn’t come as a surprise that instantiating and starting a new Thread to handle each background task yields the worst performance. This approach also demonstrates the highest variance. Relatively many data points fall at 200% of the average startup duration or more.
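To make “200% of the average startup duration or more” concrete, the following Java sketch computes the share of samples at or above a multiple of the mean. The class name, method names, and sample values are hypothetical and are not taken from the benchmark.

```java
import java.util.Arrays;

// Hypothetical helper for summarizing startup durations.
class StartupStats {

    // Arithmetic mean of the samples (0.0 for an empty array).
    static double mean(long[] samples) {
        return Arrays.stream(samples).average().orElse(0.0);
    }

    // Fraction of samples that are at least `factor` times the mean.
    static double shareAtOrAbove(long[] samples, double factor) {
        if (samples.length == 0) {
            return 0.0;
        }
        double threshold = mean(samples) * factor;
        long count = Arrays.stream(samples).filter(s -> s >= threshold).count();
        return (double) count / samples.length;
    }

    public static void main(String[] args) {
        // Made-up durations in microseconds, for illustration only.
        long[] hypotheticalMicros = {100, 100, 100, 100, 600};
        System.out.println("mean: " + mean(hypotheticalMicros));
        System.out.println("share at >= 200% of mean: " + shareAtOrAbove(hypotheticalMicros, 2.0));
    }
}
```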

The surprising result is that using Coroutines incurs ~50% startup time overhead compared to a standard thread pool. So, the generally accepted idea that Coroutines are faster than Threads turns out to be incorrect.

The most important observation, however, is that all these performance considerations are pretty much irrelevant in the context of Android development. Note that even on the Galaxy S7, which is a dinosaur device, instantiating and starting a new Thread takes less than 1 millisecond on average, and even the slowest startups take just several milliseconds. On newer devices, everything is much faster. This overhead is negligible for the absolute majority of Android applications out there. Unless your app starts hundreds of background tasks, you can happily use bare Threads for background work.

Conclusion

This benchmark demonstrated that Kotlin Coroutines aren’t necessarily faster than Threads. Sure, I can construct a benchmark where Coroutines will show better results, but the scenario that I tested here is actually the most common use case for Coroutines in Android applications. Therefore, in the context of Android development, I expect the average performance impact of Coroutines to be in the ballpark of the results described in this article.

In the next post, I’ll benchmark and compare the memory consumption of Coroutines and Threads.

As always, thank you for reading. If you liked this article, consider subscribing to my email list to be notified about new posts.

Comparing coroutines to threads in this manner is like comparing apples and oranges, and demonstrates a lack of understanding of their use cases and trade-offs.

Try making 10000 threads doing IO bound work and THEN compare them with coroutines. You’ll be shocked.

What I tested with this benchmark is literally the most common use case for background work in Android apps. How many apps have you seen that start 10000 concurrent background tasks (or even 100)?

Please read the Kotlin documentation: your 10k IO coroutines will be executed in a special (for IO) thread pool that is limited by default to 64 threads (or the number of cores, if larger), one after another. And if ~100 of your 10k IO operations aren’t fast enough, they will block all the others. Coroutines are not magic. If you want to handle 10k IO tasks on 64 threads (or even on a single thread), you have to use the java.nio package, which uses the select/epoll system calls.

Andrey, this is not quite correct. The whole point of the suspension mechanism in Kotlin is that many coroutines can be scheduled on a single Thread for I/O operations, since waiting threads are not doing anything productive, hence the potential gain in efficiency.

It sounds like you’ve misunderstood how coroutines are better than threads. The Kotlin docs don’t make any claims that coroutines offer better startup latency, but rather coroutines use much less memory than creating a large quantity of JVM threads.

There is literally an official Android Developers article titled Improve app performance with Kotlin Coroutines. Also, the claim that “Coroutines use much less memory…” is incorrect. The correct statement is that under very specific conditions, Coroutines can have advantages over threads. In practice, Android applications hardly ever work in these conditions, so these theoretical benefits are largely irrelevant for Android developers.

If you actually read that article, it doesn’t mention anything about improvements using coroutines over threads. It’s only referring to improving performance over entirely synchronous code.

You also claimed that this article (https://kotlinlang.org/docs/coroutines-basics.html#coroutines-are-light-weight) is false, but you don’t bother to elaborate, or cite anything?

And yet, many Android developers fully believe in the idea that Coroutines are more performant than threads.

As for the other article, I didn’t reference it at all, so I couldn’t possibly have claimed that it’s false.

Thank you for letting me know you couldn’t take a second to open the link. It’s a page from the Kotlin language documentation discussing the memory advantage of coroutines over threads, which you claimed was false and failed to elaborate.

Zach, you said that I claimed that the article is false. I didn’t claim that. Now you are again putting words in my mouth that I didn’t say. If you want to have a discussion with me on my site, please drop this passive-aggressive approach. Just ask your questions in a straight manner, and you’ll get straight answers.

The doc you linked states “Coroutines are less resource-intensive than JVM threads.” It’s super easy to prove that this statement is wrong in general: launching one Thread is much cheaper than launching one Coroutine. While you can’t make such a statement, you can say that coroutines can be less resource-intensive in specific situations. Their example is one such situation, though it’s completely made up and impractical.

So, I’ll reiterate what I wrote earlier (please note how it’s different from the statements you attributed to me): The correct statement is that under very specific conditions, Coroutines can have advantages over threads. In practice, Android applications hardly ever work in these conditions, so these theoretical benefits are largely irrelevant for Android developers.

Your statement is hardly correct. In most cases, coroutines are faster, as their overhead is lower. Of course, you can build corner cases (as you did) that make them perform worse. But you are trying to sell that this is the norm, while it is the exception.

Also, what you call “threads” are really executors, which are no more “threads” than coroutine contexts are: in both cases the threads are prepared up front and reused, so much of the advantage that comes from reusing them goes away.

Under the hood, coroutines are runnables with an additional parameter representing the continuation, running on an executor. If you are not going to make any use of the continuation, you do not need coroutines; you are just adding overhead on top of runnables and fixed thread pools (which is what you measure).

As I wrote multiple times, this benchmark represents the most common use case for coroutines and threads/thread pools in Android. I don’t get why you insist that it isn’t, after I explained this aspect to you multiple times, while not providing any other benchmark. You also don’t provide any evidence for “in most cases, coroutines are faster”.

I think we’ll just need to agree to disagree and end this lengthy discussion. Thanks for your comments.

Sure, we must agree that we disagree. Indeed, I believe that spawning multiple tasks that do nothing and running them sequentially is not the most common Android use case. It is not even a use case.

The benchmark is not well designed: it provides information about launching many independent low-effort tasks. The point is not the sheer startup time, but the interaction and organization among multiple control flows. Sending a Runnable to a thread pool is just a matter of calling a constructor: of course it’s fast. You should measure the cost of doing I/O, suspending and getting back control.

We recently tested, in a master’s thesis, the performance of coroutines, Java 21’s virtual threads, and plain old executors; and guess what? In a real-world test case, coroutines and vthreads are faster.

As I wrote in the article, I can design a benchmark that will show favorable results for Coroutines. However, it would be largely irrelevant in the context of Android development because these use cases (e.g. many concurrent IO-bound tasks) are rarely ever needed in Android apps.

You wrote that you tested a “real-world test case”. Could you elaborate on how exactly your benchmark was structured?

I can do better: the thesis is publicly available at https://amslaurea.unibo.it/29647/1/thesis-main.pdf.

It’s on the JVM, not on Android.

It is not a matter of fabricating a synthetic test that looks favorable on this or that specific approach to parallelism, but using them in real cases and seeing the difference. Your test, to the best of my understanding, measures just the creation time, Runnable vs. Coroutine vs. Thread, and I find it not particularly significant, as the price you pay for threading (appropriately, let me leave starting threads manually off the scope of the discussion) is mostly a price due to synchronization and communication.

You say that concurrent I/O bound operations are rarely ever needed in Android apps, but it looks to me that many of them perform background fetching of data from the Internet, which sounds exactly like concurrent I/O bound operations 🙂

Using thread pools is the way to go in the case of many CPU-heavy independent parallel loads. When there is interaction, coroutines are normally better. By the way, I think they would also be a good fit for implementing parallel operations on monadic constructs (read: parallel Java Streams): the current implementation feeds Runnables to the ForkJoinPool, so there might be a small performance edge.

I don’t have time to read the entire thesis, but, as far as I could infer, you performed parallel decomposition of some algorithm and benchmarked the throughput using coroutines and a thread pool. This is not the typical use case in Android (and I’d even argue not a typical use case at all), and, furthermore, my benchmark measures latency, not throughput. So, this really is apples to oranges, and the result from your thesis doesn’t contradict my result in any way.

I didn’t say that IO operations are rarely ever needed. I said that you rarely ever need many such operations. Furthermore, even if you find a very exotic use case that involves something like this (e.g. fetching data from many servers in parallel), I assure you that the latency of the network would make any perf benefit you can get from using coroutines negligible. A rounding error.

So, as I said, this benchmark measures the most common use case in Android apps: offloading individual tasks to a background thread. Your thesis is not very relevant to Android development.

Your interpretation of the thesis is correct. Decomposition and parallelization are central to most types of software, and Android development is no exception, although, of course, a simulator is much more performance-sensitive than the average Android app.

You are not measuring latency; you are measuring the spawning time. A more interesting measure would have been the overhead, which would require you to include the task closure (and, possibly, some task). Note that you can use throughput as a proxy metric for the relative overhead (under the assumption that the tasks are identical).

Just to be sure: I am not saying that your results are incorrect or that mine contradict yours; I believe that your methodology measures an aspect (spawning time) that is mostly irrelevant, and does not support the thesis that “I demonstrated that Kotlin Coroutines aren’t faster than Threads”. The thesis you are correctly supporting is “creating an object and submitting it to a pool of existing JVM threads is faster than creating coroutines and submitting them to a context”.

Danilo.

While I agree with your last comment, “Using thread pools is the way to go in the case of many CPU-heavy independent parallel loads”, I think the thesis is not a good basis for the other claims. I skimmed through the text and found these issues:

1. It’s not repeatable. The source code is not available. The thesis shows only example code for various approaches, not the actual code that is running.

2. It does not analyze possible weaknesses in the test setup, and it does not explain how those issues are mitigated. Using @Threads(4) can favor implementations that work better when run in parallel, for example Kotlin’s coroutines. It might be that the ForkJoin pool for Java does not handle resources as efficiently. If you allocated as many threads as there are CPUs per test execution and launched 4 test threads, the tests were competing for CPU time, and that caused overhead. Code execution switching between Kotlin and Java can cause additional overhead. There are many similar causes that can affect the results, but there is no analysis of them.

3. I could not find any explanation in the thesis of how CPU-bound tasks differ from tasks that do IO. Does the code used in the thesis run only CPU-bound tasks, or does it also do IO? Ron Pressler explains how and why the different kinds of limitations (CPU, IO) affect parallel processing, and what Little’s Law is: https://inside.java/2020/08/07/loom-performance/

In my perspective, the thesis overlooks crucial factors that could significantly impact the results. This omission limits the generalizability of the findings, thereby weakening the foundation for drawing meaningful conclusions.

To bring more meat to the discussion I’ll chip in with my own tests.

I’ve been investigating CPU-bound task parallelization (parallel map() implementations) on the JVM with Java and Kotlin implementations. It seems that with a million items and very light tasks, a for loop, Kotlin list.map{}, or List(list.size){i -> fn(list.get(i))} has the best performance. The overhead of parallel processing exceeds the gains of doing small things in parallel. Using thread pools increases the duration from, for example, 34ms to 222ms (+190ms), and using newVirtualThreadExecutor increases the time to 657ms. That overhead can be significant in some cases.

With high cpu requirements per item a fixed pool of virtual threads (count = cpu core) has the best performance and fixed OS threads are on the same level. The difference might be because of slightly less overhead with Virtual Threads. Based on my tests, I would use a fixed pool of virtual threads for pmap() and avoid pmap() for light cpu bound tasks.

In both cases (very low CPU usage and high CPU usage per item), mapping one million items in parallel with Kotlin coroutines has very high overhead. Low-CPU tasks take 1.4 seconds (compared to 32ms with List(n){}). When coroutine-based map()s need to compete with a pmap() based on a fixed thread pool, the difference is 11.6s vs 2.4s. The difference between list.map{} and coroutine-based pmap()s stays the same (1.4s). I used two coroutine-based implementations of my own and two from https://kt.academy/article/cc-recipes and they have the same performance. So, based on my tests, coroutines are not suitable for a high number of CPU-bound tasks, with either low or high CPU requirements.

If someone wants to correct my coroutine maps() or suggest other approaches the code is in GitHub and I accept PRs: https://github.com/mikko-apo/jvm21-demo/blob/main/jvm21-demo/src/main/kotlin/fi/iki/apo/pmap/KotlinMapAlternatives.kt

I haven’t JMHized the tests yet so there might be some issues I haven’t mitigated, but the test runs produce consistent and comparable results for every run.

There are also other factors that have an effect when comparing implementations. The programming model used can have a huge effect on the probability of implementation errors and on the understandability of the code base. Understanding the constraints helps in selecting the right approach, and generalizing seems a bit risky, as many things affect the results.

I’ll end with a quote from Ron: Judging the merits of an implementation requires evaluating it in the context of the use-cases it seeks to optimize as well as the idiosyncratic constraints and strengths of the language/runtime it targets.

As others pointed out before, how can we be sure you properly understand how to use coroutines and correctly implemented the “3 different ways” for your benchmark without seeing the code?

Also, in real world usage, a coroutine rarely only does one job. It will start on the main thread, switch to an IO thread to get some data, switch to the default thread to compute that data, and switch back to the main thread to publish the value to the ui framework. This is where coroutines shine.

There is a link in the article to an open-sourced app that you can install, run the benchmark, review the code, etc.

I join the chorus of folks thinking you’re missing the point of coroutines and comparing apples to oranges, but for different reasons. Show me a traditional multi-threaded client app and I will show you callback hell with a dozen race conditions. Coroutines and structured concurrency, when used idiomatically, should vastly reduce the complexity of your application.

Consider: On Android — or any client platform — the key scenario for multi-tasking is asynchronous I/O. That I/O will vastly dwarf any of the costs you’re talking about here. It simply isn’t relevant, in common Android scenarios, to consider these costs.

Ironically, this analysis might be more compelling when talking about server-side coroutines, but then you’d need to completely change your methodology — being careful not just about platforms but also configurations (e.g. what dispatcher / threading model you’re using).

The myth you’re attempting to dispel isn’t one that is widely held, at least in my corner of the industry.

I’m not sure why people are getting so angry in this thread. I for one would just wanna thank Vasiliy for putting HIS point on HIS platform and allowing us to put our brain cells to use.

People are welcome to disagree, but please do it in a polite manner. You don’t have to be a jerk about it.

Thanks pal, I really appreciate that.

I couldn’t understand what prompted so many “you know nothing, Jon Snow” comments. There is an open-source benchmark, so, if people disagree, they’re more than welcome to propose an alternative implementation, instead of writing angry comments. Well, at least that’s what I’d do.

The post and the benchmark demonstrate that the author does not understand the underlying concurrency models he is comparing, and when people are pointing this out in the comments he is getting defensive.

It’s okay, concurrency is hard. Keep at it.

Thanks mate, appreciate your encouragement. I thought that being the author of the most comprehensive course about concurrency in Android and one of the most comprehensive courses about Coroutines specifically gives me a bit of credibility, but, obviously, I need much more experience to get to your level. I’ll keep at it.

I thought I’d have Bard take a shot at summarizing the comments after reading the (great) article.

Here is the initial draft:

https://g.co/bard/share/8daf9ef72b64

Then, a second draft:

https://g.co/bard/share/77e8793d29bd

I know it’s a little off track but this AI thing is consuming me.

Not bad at all!

Startup latency does not really affect performance in any way. What really affects performance is thread priority. While you can easily set the priority of a thread and call it a day, you never know which thread a coroutine will execute on, because it can switch threads during execution (by definition) and that is abstracted away. You also cannot set the priority of a coroutine (issue #1617). So, for computation tasks where performance matters, the better choice is of course a thread with the highest priority. It’s actually in the Android docs: “Every time you create a thread, you should call setThreadPriority()”.

A while back I happened to run a simple Kotlin coroutines vs pure threads comparison on Android, inside a loop of, I think, a couple hundred or a few thousand simple one-statement iterations. Guess what: I was surprised that the threads ran faster than the coroutines. I still prefer coroutines for their control structure, though.

Question, did you benchmark coroutines scheduled atop a pool of threads… ?

ah, that article really hit the nail on the head… it’s like a bucket of cold water on the face whenever there’s a hype train going around, especially with claims of “better performance”… it’s always good to have that dose of skepticism, and nothing beats rolling up our sleeves and testing things in our own sandbox… that’s where the rubber meets the road…

i also like how the article subtly reminds us that choosing coroutines over threads isn’t always about chasing performance numbers… coroutines have this elegant way of making async code read like it’s sync, which is… kind of liberating… it takes away a lot of the headache that comes with callback hell, making the code more readable and, well, sane… it’s like choosing a less bumpy road even if it’s not the shortest… and sometimes, that makes the journey more enjoyable and less of a chore…

the difference in performance, as shown, isn’t something that would keep me up at night unless i’m dealing with some ultra high concurrency scenario where milliseconds are gold… it’s a good reminder that there’s more to our tech choices than just raw performance… sometimes it’s about writing code that we, or the poor soul who inherits our codebase, can read without pulling their hair out… and that, in the long run, might just be worth its weight in gold…